Writing this blog took considerable effort. If you enjoyed it, please consider subscribing. Feel free to leave a comment if you'd like me to cover a specific industry or function in the next post.

Additionally, I run a digital e-commerce platform for business education on Gumroad: https://karandhir8.gumroad.com. I also mentor students on https://topmate.io/karan_dhir10

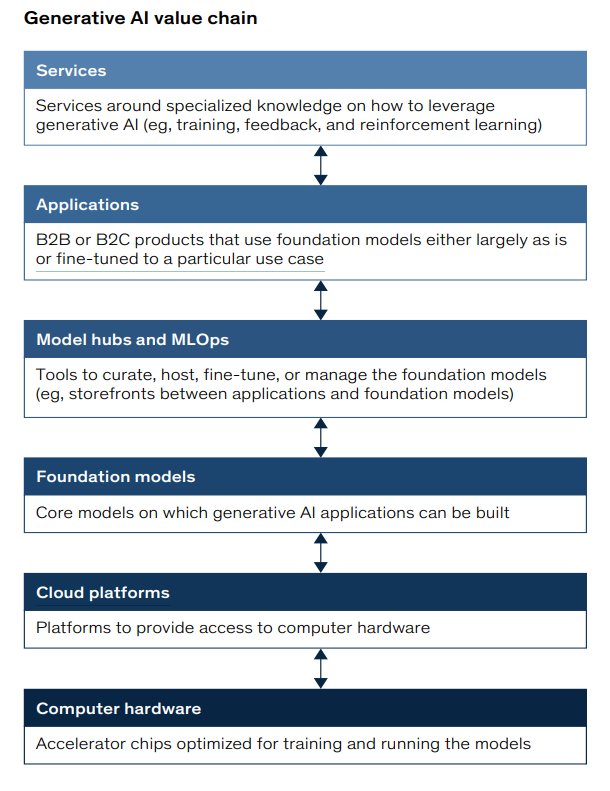

Generative AI is reshaping industries—whether you're in media, finance, consumer goods, or logistics. But most people encounter only the final application: a chatbot, a content generator, a recommendation tool. Behind this, however, lies a complex and layered value chain, each part of which contributes to the AI product you see.

1. Compute Infrastructure (Hardware)

Generative AI systems require vast amounts of training data to function effectively. For instance, OpenAI’s GPT-3 was trained on data drawn from around 45 terabytes of raw text, equivalent to nearly a million feet of bookshelf space. Processing this volume of data is far beyond the capacity of standard computer hardware.

Instead, these models are trained on massive clusters of specialized chips such as GPUs (Graphics Processing Units) or TPUs (Tensor Processing Units). These "accelerator" chips are built to perform enormous numbers of calculations in parallel, which makes them well suited to the heavy computational demands of training models with billions of parameters.
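A common rule of thumb from the scaling-law literature (an outside approximation, not something this post relies on) puts training compute at roughly 6 × parameters × training tokens floating-point operations. The minimal sketch below applies it to GPT-3's published figures (about 175 billion parameters and 300 billion training tokens) and assumes, hypothetically, that each accelerator sustains 40% of an NVIDIA A100's 312 TFLOP/s peak and that work scales perfectly across chips.

```python
# Back-of-envelope training-time estimate using the ~6 * N * D rule of thumb.
SECONDS_PER_DAY = 86_400
SECONDS_PER_YEAR = 365.25 * SECONDS_PER_DAY

def training_flops(params: float, tokens: float) -> float:
    """Approximate total floating-point operations to train a dense model."""
    return 6 * params * tokens

def training_seconds(total_flops: float, peak_flops: float,
                     utilization: float, num_chips: int) -> float:
    """Wall-clock time, assuming perfect scaling across chips (optimistic)."""
    return total_flops / (peak_flops * utilization * num_chips)

A100_PEAK_FLOPS = 312e12  # dense BF16 tensor-core peak, FLOP/s
UTILIZATION = 0.40        # hypothetical sustained fraction of peak

gpt3_flops = training_flops(params=175e9, tokens=300e9)
one_chip_years = training_seconds(gpt3_flops, A100_PEAK_FLOPS, UTILIZATION, 1) / SECONDS_PER_YEAR
cluster_days = training_seconds(gpt3_flops, A100_PEAK_FLOPS, UTILIZATION, 1024) / SECONDS_PER_DAY

print(f"Total compute:      {gpt3_flops:.2e} FLOPs")
print(f"One accelerator:    ~{one_chip_years:.0f} years")
print(f"1,024 accelerators: ~{cluster_days:.0f} days")
```

Under these assumptions a single chip would need roughly 80 years, while 1,024 chips working in parallel finish in about a month, which is why training happens on large clusters rather than on individual machines.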

Even after the foundation model is trained, businesses often use these same clusters to fine-tune the model for specific use cases or to run it within applications (a step known as inference). However, these post-training tasks typically require much less computing power.
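The same style of estimate shows why post-training work is lighter. As a rule of thumb, a forward pass costs roughly 2 × parameters FLOPs per token, and full fine-tuning uses the training formula but on a far smaller dataset. The workload sizes below (one million generated tokens, a 100-million-token fine-tuning set) are hypothetical.

```python
def training_flops(params: float, tokens: float) -> float:
    """~6 FLOPs per parameter per training token (forward + backward pass)."""
    return 6 * params * tokens

def inference_flops(params: float, tokens: float) -> float:
    """~2 FLOPs per parameter per token for a forward pass only."""
    return 2 * params * tokens

PARAMS = 175e9  # a GPT-3-sized model

pretrain = training_flops(PARAMS, 300e9)  # original pre-training run
finetune = training_flops(PARAMS, 100e6)  # hypothetical full fine-tune on 100M tokens
serve = inference_flops(PARAMS, 1e6)      # hypothetical: generate one million tokens

print(f"Fine-tuning uses ~{pretrain / finetune:,.0f}x less compute than pre-training")
print(f"Serving 1M tokens uses ~{pretrain / serve:,.0f}x less compute than pre-training")
```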

The market for these advanced chips is highly concentrated. NVIDIA and Google lead in chip design, while TSMC (Taiwan Semiconductor Manufacturing Company) handles most of the actual manufacturing. For newcomers, breaking into this space is difficult due to the high R&D costs and technical complexity.

2. Cloud Platforms

GPUs and TPUs—essential for training and running generative AI models—are both costly and in limited supply, making it impractical for most companies to purchase and maintain them on-site. Instead, businesses increasingly rely on the cloud to handle these compute-heavy tasks. Cloud platforms offer flexible access to the necessary processing power while allowing organizations to scale resources and control costs more efficiently.
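The rent-versus-buy trade-off can be made concrete with a simple break-even calculation: an owned chip costs money every hour of its life, busy or idle, while a cloud chip is paid for only when it is used. Every price below is a made-up placeholder, not a vendor quote, and the model ignores financing, staffing, and discounts.

```python
HOURS_PER_YEAR = 24 * 365

def owned_cost_per_hour(purchase_price: float, lifetime_years: float,
                        operating_cost_per_hour: float) -> float:
    """Amortized hourly cost of an owned accelerator, paid whether or not it is busy."""
    return purchase_price / (lifetime_years * HOURS_PER_YEAR) + operating_cost_per_hour

def break_even_utilization(owned_hourly: float, cloud_hourly: float) -> float:
    """Fraction of hours a chip must be busy before owning beats renting."""
    return owned_hourly / cloud_hourly

# Hypothetical placeholder prices: purchase, 3-year life, power/hosting, cloud rate.
owned = owned_cost_per_hour(purchase_price=30_000, lifetime_years=3,
                            operating_cost_per_hour=0.40)
threshold = break_even_utilization(owned, cloud_hourly=4.00)

print(f"Owning costs ${owned:.2f}/hour; renting is cheaper below {threshold:.0%} utilization")
```

With these placeholder numbers, owning only pays off if the hardware stays busy more than about 39% of the time over three years. The exact figure matters less than the shape of the trade-off: the less steady the workload, the stronger the case for renting.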

Naturally, the leading cloud providers have become the go-to platforms for generative AI workloads, largely because they have preferential or early access to the latest hardware.

3. Foundation Models

At the core of generative AI lie foundation models—large, versatile deep learning models that are trained to generate a specific kind of output, such as text, images, or audio. These models serve as a general-purpose base, allowing developers to build a wide range of applications on top of them. For instance, OpenAI’s GPT-3 and GPT-4 are foundation models capable of producing highly fluent text and have been integrated into numerous applications, including ChatGPT and SaaS platforms like Jasper and Copy.ai.

These models are trained using vast datasets, which can include publicly available information (from sources like Wikipedia, government portals, books, and social media) as well as proprietary data. OpenAI, for example, licensed images from Shutterstock through a formal partnership to train one of its image-generation models.

Creating a foundation model requires advanced capabilities across several stages:

Curating and preparing large volumes of data

Choosing the right model architecture

Training the model with high-performance computing

Tuning the model for better performance, which involves evaluating the quality of its outputs and feeding those evaluations back into training.
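The first stage is easy to underestimate. Below is a minimal sketch of two common curation steps, length filtering and exact deduplication; the threshold and helper names are hypothetical, and real pipelines typically add near-duplicate detection, quality classifiers, and safety filtering on top.

```python
import hashlib
import re

def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants hash identically."""
    return re.sub(r"\s+", " ", text).strip().lower()

def curate(documents: list[str], min_words: int = 5) -> list[str]:
    """Drop very short documents and exact duplicates (after normalization)."""
    seen: set[str] = set()
    kept: list[str] = []
    for doc in documents:
        clean = normalize(doc)
        if len(clean.split()) < min_words:
            continue  # too short to be useful training text
        digest = hashlib.sha256(clean.encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # duplicate of a document already kept
        seen.add(digest)
        kept.append(doc)
    return kept

corpus = [
    "The quick brown fox jumps over the lazy dog.",
    "The  quick brown fox jumps over the LAZY dog.",  # duplicate after normalization
    "Too short.",
    "Foundation models are trained on large, filtered text corpora.",
]
print(curate(corpus))
```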

The training process is highly resource-intensive and costly. For context, the estimated cost to train GPT-3 ranges from $4 million to $12 million.

Because of the high barriers to entry (cost, expertise, and compute), the space is currently led by large tech firms and well-funded start-ups such as Anthropic, which builds its own large language models and offers them as services. That said, work is underway on smaller, more efficient models that can handle targeted tasks without the same heavy infrastructure.

In parallel, many large enterprises are showing interest in deploying LLMs within their own secure environments, motivated by concerns around data privacy and intellectual property.

4. Model Hubs & MLOps

To develop applications using foundation models, businesses need two key components:

A platform to store and access the foundation model, and

MLOps tools and infrastructure to adapt and integrate the model into their applications. This includes features such as adding and labeling new training data, and building APIs that enable applications to interact with the model.

Model hubs serve this purpose. For proprietary (closed-source) models, the original developer typically acts as the model hub, providing access through a licensed API. These providers may also offer MLOps services to help businesses fine-tune and deploy the model in various use cases.
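For a closed-source model, "access through a licensed API" usually means sending authenticated HTTP requests with a paid API key. The sketch below uses OpenAI's publicly documented chat-completions endpoint as one example and only Python's standard library; the model name and key are placeholders, and the request is built but not sent.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"

def build_chat_request(api_key: str, model: str, user_message: str) -> urllib.request.Request:
    """Prepare (but do not send) a chat-completion request to a hosted model API."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # placeholder key below
            "Content-Type": "application/json",
        },
        method="POST",
    )

req = build_chat_request("sk-your-key", "gpt-4o-mini", "Summarize our refund policy.")
print(req.get_method(), req.full_url)

# Sending it requires a valid key and network access:
# with urllib.request.urlopen(req) as resp:
#     reply = json.load(resp)["choices"][0]["message"]["content"]
```

Providers differ in endpoints and payload fields, so treat this as the general shape of such a call (an authenticated POST with a JSON body) rather than a universal interface.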

In the case of open-source models, where the code is freely available for modification and use, third-party model hubs have emerged. Some of these hubs simply aggregate models, giving AI teams access to a variety of options, including community-modified versions. These teams can then download, refine, and deploy the models themselves. Others—such as Hugging Face and Amazon Web Services (AWS)—offer full-service platforms, including model access, fine-tuning support, and deployment tools. These offerings are especially valuable for companies that want to use generative AI but lack internal expertise or infrastructure.
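To make the "aggregator" idea concrete, here is a toy in-memory registry: it records each model's task and license and links community-modified versions to the base model they derive from. The class and model names are hypothetical; real hubs such as Hugging Face add versioned file storage, model cards, and download tooling.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class ModelEntry:
    name: str
    task: str                         # e.g. "text-generation"
    license_id: str                   # e.g. "apache-2.0" or "proprietary"
    base_model: Optional[str] = None  # set for community modifications of another model

class ModelHub:
    """Toy in-memory hub that aggregates models and their derivatives."""

    def __init__(self) -> None:
        self._models: dict[str, ModelEntry] = {}

    def register(self, entry: ModelEntry) -> None:
        self._models[entry.name] = entry

    def search(self, task: str, open_only: bool = False) -> list[str]:
        """Names of models for a task, optionally excluding proprietary ones."""
        return sorted(
            m.name for m in self._models.values()
            if m.task == task and not (open_only and m.license_id == "proprietary")
        )

    def derivatives(self, base: str) -> list[str]:
        """Names of models registered as modified versions of `base`."""
        return sorted(m.name for m in self._models.values() if m.base_model == base)

# Hypothetical catalogue entries.
hub = ModelHub()
hub.register(ModelEntry("base-llm-7b", "text-generation", "apache-2.0"))
hub.register(ModelEntry("base-llm-7b-chat", "text-generation", "apache-2.0", "base-llm-7b"))
hub.register(ModelEntry("base-llm-7b-legal", "text-generation", "apache-2.0", "base-llm-7b"))
hub.register(ModelEntry("closed-llm", "text-generation", "proprietary"))

print(hub.search("text-generation", open_only=True))
print(hub.derivatives("base-llm-7b"))
```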

Whether it’s an established software provider adding AI features or a company aiming to gain a strategic edge, the ability to effectively build on foundation models is becoming a critical capability.

5. Applications & APIs

A foundation model can handle a broad range of tasks, but it is the applications built on top of it that put those capabilities to work on specific jobs, such as handling customer support or drafting marketing emails.

Generative AI applications generally fall into two types:

Customizable Applications: These use foundation models with some adjustments, like creating a tailored user interface or adding search features. For example, a customer service chatbot could use a foundation model but be customized to understand a company’s specific products and services.

Fine-Tuned Models: These are foundation models further trained on additional data to better suit a particular use case. For instance, Harvey, a legal AI app, fine-tuned OpenAI’s GPT-3 with legal data so that it drafts legal documents better than the base model does. Fine-tuning is far cheaper and faster than training a foundation model from scratch, which puts it within reach of many businesses.
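One common way to build the "adding search features" kind of customization is retrieval-augmented prompting: look up relevant company information, then pass it to an unmodified foundation model alongside the user's question. The sketch below uses naive word overlap in place of a real search index, and the FAQ content is hypothetical.

```python
import re

# Hypothetical product knowledge base for an imaginary company.
FAQ = {
    "returns": "Items can be returned within 30 days with a receipt.",
    "shipping": "Standard shipping takes 3-5 business days.",
    "warranty": "All devices include a one-year limited warranty.",
}

def tokenize(text: str) -> set[str]:
    """Lowercased alphanumeric words in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question: str, k: int = 1) -> list[str]:
    """Rank FAQ entries by word overlap with the question (a stand-in for real search)."""
    q = tokenize(question)
    ranked = sorted(FAQ.values(), key=lambda doc: len(q & tokenize(doc)), reverse=True)
    return ranked[:k]

def build_prompt(question: str) -> str:
    """Combine retrieved company facts with the customer's question for the model."""
    context = "\n".join(retrieve(question))
    return (
        "Answer using only the company information below.\n"
        f"Company information:\n{context}\n\n"
        f"Customer question: {question}"
    )

print(build_prompt("How many days do I have to return items?"))
```

The resulting prompt would then be sent to the foundation model through its API. No model weights change, which is what distinguishes this approach from fine-tuning.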

Companies can also leverage proprietary data to improve AI models. For example, a banking chatbot could use data from call-center interactions to better handle customer inquiries over time.
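Fine-tuning, whether on legal text or on call-center logs like the banking example, usually starts by turning the data into prompt/response pairs. The sketch below writes such pairs in the chat-style JSON Lines layout that OpenAI documents for fine-tuning (one JSON object per line with a `messages` list). It is an illustration, not Harvey's or any bank's actual pipeline; the system prompt and conversations are invented.

```python
import json

SYSTEM_PROMPT = "You are a helpful support assistant for a retail bank."  # hypothetical

def to_jsonl(pairs: list[tuple[str, str]]) -> str:
    """Convert (customer question, ideal agent answer) pairs into chat-format JSONL."""
    lines = []
    for question, answer in pairs:
        record = {
            "messages": [
                {"role": "system", "content": SYSTEM_PROMPT},
                {"role": "user", "content": question},
                {"role": "assistant", "content": answer},
            ]
        }
        lines.append(json.dumps(record))
    return "\n".join(lines) + "\n"

# Hypothetical pairs distilled from call-center interactions.
examples = [
    ("How do I reset my online banking password?",
     "Select 'Forgot password' on the sign-in page and follow the verification steps."),
    ("Can I raise my daily card limit?",
     "Yes. You can request a higher limit in the app under Card settings."),
]
jsonl = to_jsonl(examples)
print(jsonl)
```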

Generative AI is already affecting several business functions, most notably:

IT: Automates tasks such as coding and documentation. For example, GitHub Copilot uses generative AI to suggest code as developers type, and GitHub’s own research found that developers using it completed a coding task more than 50% faster.

Marketing & Sales: AI assists in creating marketing content. Jasper AI already helps companies write blog posts, ads, and email campaigns, and Gartner has predicted that by 2025, 30% of outbound marketing messages from large organizations will be synthetically generated.

Customer Service: AI-powered chatbots like Salesforce’s Einstein and Ada can answer customer queries and offer personalized support. These systems are already improving customer experiences across various industries.

Product Development: AI accelerates prototyping. In life sciences, Insilico Medicine uses AI to shorten the drug discovery process, reducing design time from months to weeks.

Industries such as media, banking, telecommunications, and life sciences are expected to benefit the most due to their heavy investment in AI-driven IT, customer service, marketing, and product development. For instance, media companies are already using AI to generate subtitles and localize content, while banks are improving customer service through AI chatbots.

6. Services & Integration

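This layer covers how businesses actually put generative AI to work, whether through service providers, niche specialists, or their own data. Examples: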

AI Service Providers: Companies like Accenture are evolving their AI services to include generative AI solutions, helping clients with use cases like personalized marketing content or automating customer support.

Niche Players: A startup like Atomwise uses generative AI for drug discovery, specializing in predicting molecular properties to speed up the research and development process for new drugs.

Specialized Functions: Zendesk and Salesforce are using generative AI to enhance customer service workflows by automating responses and improving personalization through AI-driven chatbots.

Proprietary Data: A company like Spotify could use proprietary user data to fine-tune a generative AI model, enabling more personalized music recommendations and content generation, setting it apart from competitors.